It’s time for the big showdown! In this post I continue with my benchmark frenzy, and after messing around with ZFS I put those SSDs to the test with FreeNAS. The most interesting part will be, of course, to see how those stand against Storage Spaces. On FreeNAS (FreeBSD) there’s no ATTO Disk Benchmark, obviously, but there’s Iozone. It’s not quite the same because it generates various request lengths for each chunk size, so I had no better idea than to just average those values. If you think this needs to be improved, just let me know in the comments section. For your reference, I’ve also made the raw numbers available here.
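To make the averaging step concrete, here is a minimal sketch of how such per-size averages could be computed. The column layout and the sample rows are made up for illustration; they are not the raw Iozone numbers, and the helper name `average_by_column` is my own.

```shell
# Average one column of whitespace-separated rows, grouped by another column.
# $1 = column number to group by, $2 = column number to average; rows on stdin.
average_by_column() {
  awk -v g="$1" -v v="$2" '
    { sum[$g] += $v; n[$g]++ }
    END { for (k in sum) printf "%s %.1f\n", k, sum[k] / n[k] }
  ' | sort -n
}

# Made-up sample rows: file size (KB), record length (KB), write throughput (KB/s).
printf '%s\n' '64 4 100' '64 8 200' '128 4 300' '128 8 400' |
  average_by_column 2 3
```

Swapping the two arguments' roles (for example `average_by_column 1 3`) groups by file size instead, in case that is the grouping you prefer.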

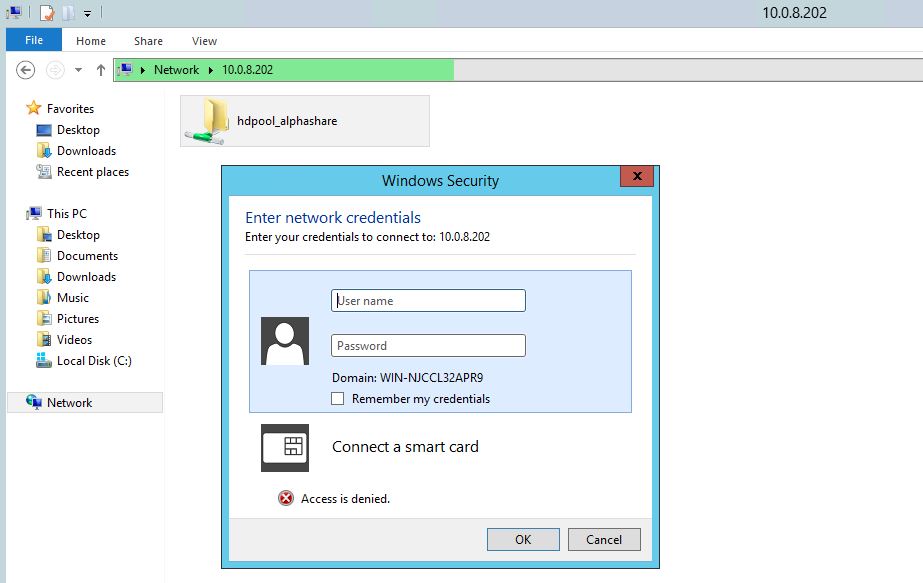

The Dom0 hosts the ZFS file system, and VMs are allocated storage as needed. Another option is to use iSCSI or even Samba to mount your ZFS file system in a Windows VM. Windows does not understand ZFS (as /u/VirtualizationFreak pointed out, someone started a project on Google Code, and it was abandoned), and it may never do so. iSCSI presents a block storage device, which is not significantly different from SATA, SAS, SCSI or even ATA in terms of what's presented to the OS and how the OS uses it. ZFS doesn't allow two systems to use a pool at the same time (unless it is shared through NFS or similar), and booting Windows from ZFS would be another challenge (I bet a much more difficult one). Currently there's no good file system that works with both Windows and Linux/Unix.

Based on my (and others’) previous benchmarks, we already know all too well that while RAID10 performance is pretty decent with Storage Spaces, parity schemes just suck ass. Let’s see ZFS’ take on the topic.

Nothing too fancy with RAID10 reads. ZFS is maybe a bit more balanced, but that’s it.

Same goes for writes, totally predictable performance. Now it’s time to add a twist to it.

Yeah, with single parity it actually looks like something usable compared to Storage Spaces, but trust me, it’ll only get better.

Quite ridiculous, isn’t it? That’s how we do things in downtown. There’s simply no excuse for Microsoft with this. Storage Spaces is absolutely worthless compared to this. It’s simply humiliated by RAID-Z. Let’s see what’s the deal with double parity.

You may remember that for whatever reason SS RAID6 read performance was considerably better than that of RAID5, and in this case it shows. Overall, it’s about the same throughput as with RAID-Z2. Of course, reads are the least of our problems, so check out writes again.

Again, Storage Spaces is annihilated. Would anyone actually want to use it for… anything?

I just wanna show you how incredibly balanced ZFS RAID performance is. This chart also includes RAID-Z3, which has no Storage Spaces equivalent (it’s basically triple parity) so it’s missing from the previous comparisons.

See? I rest my case, this is the most consistent performance ever.

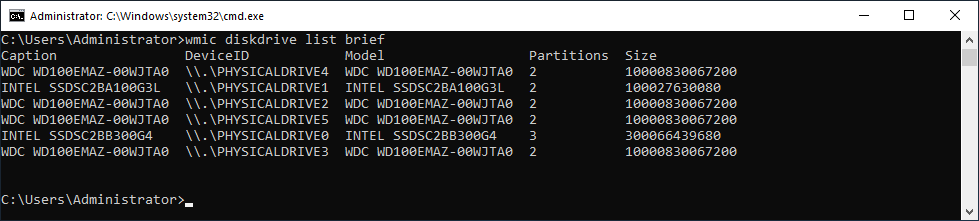

Same goes for writes. In fact, the results are almost too good to believe. Some people on #freenas even suggested that I’m limited by one or more of the buses, but it’s hard to believe given that I connect to the 24 SSDs via 24 SAS ports, those are split between two SAS3 HBA cards, and each of those cards goes into a PCI-E 3.0 x8 slot. I simply don’t see a bottleneck here.
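For anyone who wants to check the bus side themselves, here is a back-of-the-envelope sketch. It assumes each HBA sits in its own slot and uses the nominal PCI-E 3.0 rate (8 GT/s per lane, 128b/130b encoding). It ignores protocol overhead, so real ceilings are lower. The function name `bus_ceiling` is just for illustration.

```shell
# $1 = number of HBAs (assumed one PCI-E 3.0 x8 slot each), $2 = number of SSDs
bus_ceiling() {
  awk -v hbas="$1" -v ssds="$2" 'BEGIN {
    lane  = 8e9 * 128 / 130 / 8 / 1e6   # MB/s per lane after 128b/130b encoding
    slot  = lane * 8                    # one x8 slot
    total = slot * hbas                 # all slots together
    printf "per slot: %.0f MB/s\n", slot
    printf "all slots: %.0f MB/s\n", total
    printf "per SSD share: %.0f MB/s\n", total / ssds
  }'
}

bus_ceiling 2 24
```

Compare the per-SSD share with what a single drive sustains on its own to judge how much headroom is left.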

After seeing these numbers I can only repeat myself: there’s absolutely no excuse for Microsoft. I expressed in my previous post that the traditional parity levels are basically broken. I do believe that Microsoft should seriously consider the idea of incorporating the architecture of ZFS (if not ZFS itself) into a future release of Windows Server Storage Spaces. I know, at this point this sounds like blasphemy, but seriously, why not? Of course, you can always reinvent the wheel, but it’d make much more sense to join forces with the existing OpenZFS folks and help each other along the ride. I’m sorry to say, but until you do something along these lines, Storage Spaces will not be a worthy alternative.

Dear Reader! If you have a minute to spare, please cast your vote on Uservoice about this idea:

Thanks a lot, fingers crossed!

Creating a ZFS pool

We can create a ZFS pool from different kinds of devices:

a. using whole disks

b. using disk slices

c. using files

a. Using whole disks

I will not be using the OS disk (disk0).
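A minimal sketch of the whole-disk case, using the pool name geekpool and disk c1t1d0 that appear elsewhere in this post. Because `zpool create` wipes the disk, the snippet only prints the commands unless you set `ZPOOL=zpool`; that wrapper is my own convention, not part of ZFS.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

create_whole_disk_pool() {
  $ZPOOL create geekpool c1t1d0   # whole disk c1t1d0 becomes the pool's only device
  $ZPOOL list geekpool            # confirm the pool exists and check its size
}

create_whole_disk_pool
```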

To destroy the pool:
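A sketch of the destroy step, again assuming the pool is called geekpool. It prints the command by default; set `ZPOOL=zpool` only when you are sure, since the pool's data is gone afterwards.

```shell
# Dry run by default: prints the command. Set ZPOOL=zpool to really run it.
ZPOOL=${ZPOOL:-echo zpool}

destroy_pool() {
  $ZPOOL destroy "$1"   # $1 = name of the pool to destroy
}

destroy_pool geekpool
```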

b. Using disk slices

Now we will create a disk slice on disk c1t1d0 as c1t1d0s0 of size 512 MB.
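Once the slice exists (on Solaris it is typically carved out with the interactive `format` utility, which is not shown here), creating the pool on it might look like the sketch below. The dry-run wrapper is my own convention.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

create_slice_pool() {
  $ZPOOL create geekpool c1t1d0s0   # slice 0 of disk c1t1d0 instead of the whole disk
  $ZPOOL status geekpool            # shows the slice as the pool's device
}

create_slice_pool
```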

c. Using files

We can also create a zpool with files. Make sure you give an absolute path while creating the zpool.
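A sketch of the file-backed case. The paths under /geekfiles are hypothetical, and the files are assumed to exist already (on Solaris they could be pre-created with `mkfile`). The snippet prints the command unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the command. Set ZPOOL=zpool to really run it.
ZPOOL=${ZPOOL:-echo zpool}

create_file_pool() {
  # Absolute paths are required; both files are hypothetical and must already exist.
  $ZPOOL create geekpool /geekfiles/file1 /geekfiles/file2
}

create_file_pool
```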

Creating pools with Different RAID levels

Now we can create a zfs pool with different RAID levels:

1. Dynamic stripe – It's a very basic pool which can be created with a single disk or a concatenation of disks. We have already seen zpool creation using a single disk in the example of creating a zpool with whole disks. Let's see how we can create a concatenated ZFS pool.

This configuration does not provide any redundancy, so any disk failure will result in data loss. Also note that once a disk is added to a ZFS pool in this fashion, it may not be removed from the pool again. The only way to free the disk is to destroy the entire pool. This is due to the dynamic striping nature of the pool, which uses both disks to store the data.
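A sketch of a two-disk striped pool. The second disk name, c1t2d0, is an assumption for illustration. The commands are only printed unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

create_striped_pool() {
  $ZPOOL create geekpool c1t1d0 c1t2d0   # no keyword between disks means a plain stripe
  $ZPOOL status geekpool                 # both disks show up as top-level devices
}

create_striped_pool
```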

2. Mirrored pool

a. 2 way mirror

A mirrored pool provides redundancy by storing multiple copies of the data on different disks. Here you can also detach a disk from the pool, as the data will still be available on the other disks.
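A sketch of creating a two-way mirror and later detaching one side. The disk c1t2d0 is an assumed name. Nothing is executed unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

create_two_way_mirror() {
  $ZPOOL create geekpool mirror c1t1d0 c1t2d0   # both disks hold the same data
}

detach_mirror_side() {
  $ZPOOL detach geekpool c1t2d0                 # the pool keeps running on c1t1d0
}

create_two_way_mirror
detach_mirror_side
```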

b. 3 way mirror
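The three-way variant only differs in the number of disks listed after `mirror`. The names c1t2d0 and c1t3d0 are assumptions, and the command is printed rather than run unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the command. Set ZPOOL=zpool to really run it.
ZPOOL=${ZPOOL:-echo zpool}

create_three_way_mirror() {
  $ZPOOL create geekpool mirror c1t1d0 c1t2d0 c1t3d0   # three copies of every block
}

create_three_way_mirror
```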

3. RAID-Z pools

We can also have a pool similar to a RAID-5 configuration, called RAID-Z. RAID-Z comes in 3 types: raidz1 (single parity), raidz2 (double parity) and raidz3 (triple parity). Let us see how we can configure each type.

Minimum disk requirements for each type

Minimum disks required for each type of RAID-Z

1. raidz1 – 2 disks

2. raidz2 – 3 disks

3. raidz3 – 4 disks

a. raidz1

b. raidz2

c. raidz3
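The three variants differ only in the keyword and the number of disks, so here is one sketch covering all of them. The helper `raidz_create_cmd` is hypothetical: it checks the minimum disk counts listed above and prints the matching `zpool create` command without running anything. Disk names beyond c1t1d0 are assumptions.

```shell
# Print the zpool command for a RAID-Z pool, or fail if too few disks are given.
# Usage: raidz_create_cmd POOL LEVEL DISK...
raidz_create_cmd() {
  pool=$1
  level=$2
  shift 2
  case "$level" in
    raidz1) min=2 ;;
    raidz2) min=3 ;;
    raidz3) min=4 ;;
    *) echo "unknown level: $level" >&2; return 1 ;;
  esac
  if [ "$#" -lt "$min" ]; then
    echo "$level needs at least $min disks, got $#" >&2
    return 1
  fi
  echo "zpool create $pool $level $*"
}

raidz_create_cmd geekpool raidz1 c1t1d0 c1t2d0 c1t3d0
raidz_create_cmd geekpool raidz2 c1t1d0 c1t2d0 c1t3d0 c1t4d0
raidz_create_cmd geekpool raidz3 c1t1d0 c1t2d0 c1t3d0 c1t4d0 c1t5d0
```

The three calls are alternatives for the same pool name; in practice you would run only one of the printed commands.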

Adding spare device to zpool

When a spare device is added to a ZFS pool, a failed disk is automatically replaced by the spare device, and the administrator can replace the failed disk at a later point in time. We can also share the spare device among multiple ZFS pools.

Make sure you turn on the autoreplace feature (a pool property) on geekpool.
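A sketch of both steps, assuming c1t3d0 is the disk set aside as the spare (the name is made up). The commands are printed unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

add_spare_with_autoreplace() {
  $ZPOOL add geekpool spare c1t3d0      # register c1t3d0 as a hot spare
  $ZPOOL set autoreplace=on geekpool    # pool property, set with zpool rather than zfs
  $ZPOOL status geekpool                # the spare is listed in its own section
}

add_spare_with_autoreplace
```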

Dry run on zpool creation

You can do a dry run and test the result of a pool creation before actually creating it.
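ZFS has its own switch for this: `zpool create -n` shows the layout that would be built without touching the disks. A sketch with assumed disk names (the outer wrapper still only prints the command unless `ZPOOL=zpool` is set):

```shell
# Prints the command by default. Set ZPOOL=zpool to let zpool perform its own dry run.
ZPOOL=${ZPOOL:-echo zpool}

dry_run_raidz2() {
  $ZPOOL create -n geekpool raidz2 c1t1d0 c1t2d0 c1t3d0   # -n: report only, create nothing
}

dry_run_raidz2
```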

Importing and exporting Pools

You may need to migrate the zfs pools between systems. ZFS makes this possible by exporting a pool from one system and importing it to another system.

a. Exporting a ZFS pool

Before a pool can be imported, it must first be explicitly exported from the source system. Exporting a pool writes all unwritten data to the pool and removes all information about the pool from the source system.

If some of its file systems are still mounted and busy, you can force the export.
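A sketch of the normal and the forced export for geekpool. The two functions are alternatives, and both only print the command unless `ZPOOL=zpool` is set.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}

export_pool() {
  $ZPOOL export geekpool      # regular export
}

force_export_pool() {
  $ZPOOL export -f geekpool   # -f: forcefully unmount file systems first
}

export_pool
force_export_pool
```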

b. Importing a ZFS pool

Now we can import the exported pool. To see which pools can be imported, run the import command without any options.

In the output each pool has a unique ID, which comes in handy when you have multiple pools with the same name. In that case a pool can be imported using the pool ID.
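A sketch of the listing step. It prints the command by default; with `ZPOOL=zpool` it lists importable pools and changes nothing.

```shell
# Dry run by default: prints the command. Set ZPOOL=zpool to really run it.
ZPOOL=${ZPOOL:-echo zpool}

list_importable_pools() {
  $ZPOOL import   # no pool name: only lists the pools that could be imported
}

list_importable_pools
```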

Importing Pools with files

By default the import command searches /dev/dsk for pool devices. To see pools that are importable with files as their devices, we can use:
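A sketch using the `-d` option to point the search at a directory. The directory /geekfiles is hypothetical and stands for wherever the backing files live.

```shell
# Dry run by default: prints the command. Set ZPOOL=zpool to really run it.
ZPOOL=${ZPOOL:-echo zpool}

list_file_backed_pools() {
  $ZPOOL import -d /geekfiles   # search this directory instead of /dev/dsk
}

list_file_backed_pools
```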

With all that said and done, we can now import the pool we want:

Similar to export, we can force a pool import:
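A sketch of the three ways to import. They are alternatives, so pick one. The numeric ID below is a made-up placeholder; the real one comes from the listing printed by `zpool import`.

```shell
# Dry run by default: prints the commands. Set ZPOOL=zpool to really run them.
ZPOOL=${ZPOOL:-echo zpool}
POOL_ID=${POOL_ID:-1234567890}   # hypothetical ID; copy the real one from `zpool import`

import_by_name() {
  $ZPOOL import geekpool
}

import_by_id() {
  $ZPOOL import "$POOL_ID"       # useful when two pools share a name
}

import_forced() {
  $ZPOOL import -f geekpool      # -f: import even if the pool looks in use elsewhere
}

import_by_name
import_by_id
import_forced
```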

Creating a ZFS file system

The best part about ZFS is that Oracle (or should I say Sun) has kept the commands for it pretty easy to understand and remember. To create a file system fs1 in an existing ZFS pool geekpool:

By default, when you create a file system in a pool, it can take up all the space in the pool. To limit the usage of a file system we define a reservation and a quota. Let us consider an example to understand quota and reservation.
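A sketch of that step, printing the commands unless `ZFS=zfs` is set (the wrapper is my own convention):

```shell
# Dry run by default: prints the commands. Set ZFS=zfs to really run them.
ZFS=${ZFS:-echo zfs}

create_fs1() {
  $ZFS create geekpool/fs1   # file system names are written as pool/name
  $ZFS list geekpool/fs1     # shows used and available space and the mount point
}

create_fs1
```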

Suppose we assign quota = 500 MB and reservation = 200 MB to the file system fs1. We also create a new file system fs2 without any quota or reservation. Now 200 MB out of the 1 GB pool is reserved for fs1, and no other file system can use it. fs1 can also take up to 500 MB (its quota) out of the pool, but only if that space is free. So fs2 may take up to 800 MB (1000 MB – 200 MB) of pool space.

So if you don’t want the space of a file system to be taken up by other file systems, define a reservation for it.

One more thing: a reservation can’t be greater than the quota if a quota is already defined. On the other hand, when you do a zfs list, the available space shown for the file system equals the quota defined for it (if the space is not occupied by other file systems), and not the reservation as you might expect.

To set the reservation and quota on fs1 as stated above:
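The arithmetic in this example, written out as a small sketch (the variable names are mine, and the pool is rounded to 1000 MB as in the text):

```shell
pool_mb=1000             # 1 GB pool, rounded as in the example
fs1_reservation_mb=200   # space guaranteed to fs1
fs1_quota_mb=500         # most that fs1 may ever use

# fs2 has no limits of its own, so it can use everything not reserved for fs1.
fs2_max_mb=$((pool_mb - fs1_reservation_mb))

echo "fs1 guaranteed: ${fs1_reservation_mb} MB"
echo "fs1 ceiling: ${fs1_quota_mb} MB"
echo "fs2 ceiling: ${fs2_max_mb} MB"
```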
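A sketch with the values from the example (500 MB quota, 200 MB reservation). The commands are printed unless `ZFS=zfs` is set.

```shell
# Dry run by default: prints the commands. Set ZFS=zfs to really run them.
ZFS=${ZFS:-echo zfs}

limit_fs1() {
  $ZFS set quota=500m geekpool/fs1                 # upper bound for fs1
  $ZFS set reservation=200m geekpool/fs1           # space guaranteed to fs1
  $ZFS get quota,reservation geekpool/fs1          # verify both properties
}

limit_fs1
```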

To set mount point for the file system

By default a mount point (/poolname/fs_name) is created for the file system if you don’t specify one. In our case it was /geekpool/fs1. You also do not need an entry for the mount point in /etc/vfstab, as it is stored internally in the metadata of the ZFS pool and mounted automatically when the system boots up. If you want to change the mount point:
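A sketch of moving fs1 to another mount point. The target directory /data/fs1 is hypothetical. Commands are printed unless `ZFS=zfs` is set.

```shell
# Dry run by default: prints the commands. Set ZFS=zfs to really run them.
ZFS=${ZFS:-echo zfs}

move_fs1_mountpoint() {
  $ZFS set mountpoint=/data/fs1 geekpool/fs1   # /data/fs1 is a made-up target
  $ZFS get mountpoint geekpool/fs1             # confirm the new value
}

move_fs1_mountpoint
```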

Other important attributes

You may also change some other important attributes like compression, sharenfs, etc. We can also specify attributes while creating the file system itself.
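A sketch of both approaches: changing properties on the existing fs1, and passing them with `-o` while creating fs2. The mount point /data/fs2 is hypothetical. Nothing is executed unless `ZFS=zfs` is set.

```shell
# Dry run by default: prints the commands. Set ZFS=zfs to really run them.
ZFS=${ZFS:-echo zfs}

tune_existing_fs() {
  $ZFS set compression=on geekpool/fs1   # compress newly written data
  $ZFS set sharenfs=on geekpool/fs1      # export the file system over NFS
}

create_fs_with_properties() {
  # -o sets a property at creation time; /data/fs2 is a made-up mount point.
  $ZFS create -o compression=on -o mountpoint=/data/fs2 geekpool/fs2
}

tune_existing_fs
create_fs_with_properties
```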